Yonatan Keller

The “Agentic Trojan Horse” Debate: How OpenClaw’s Convenience Model Opens Real-World Attack Paths

February 17, 2026

Imagine a world where an AI agent spots a zero-day and prevents its exploitation before it ever becomes public or weaponized. In July 2025, that became reality when Google’s autonomous AI agent Big Sleep achieved a historic breakthrough by detecting and preventing imminent exploitation of a zero‑day vulnerability in SQLite. Leveraging a few artefacts indicating some uncharacterized threat activity (and provided by a human threat research team), Big Sleep successfully synthesized internal behavioral telemetry with external threat intelligence to discover the vulnerability, raise an alert and trigger defensive actions. This marks the first known incident of an AI agent stepping in proactively — not merely identifying a bug, but mobilizing real-time mitigation before adversaries struck.

The Big Sleep example underscores that the implications of AI are no longer theoretical: defenders and attackers are now in an all-out race. Both sides are rushing to harness generative AI’s power to either discover and close vulnerabilities or exploit them at speed. The problem is that these capabilities are inherently dual‑use: the same model pipelines and techniques that can detect or mitigate a vulnerability can also be used to craft exploit code or accelerate attacks. As defenders train AI to uncover hidden flaws and deploy automated controls, attackers can leverage identical LLM infrastructures to scale reconnaissance, weaponize exploits, or evade detection.

The introduction of GenAI into the vulnerability exploitation ecosystem is a sea change in the speed, scale, and accessibility of exploitation. There will be MORE ATTACKERS, as AI dramatically lowers the barriers to entry into the vulnerability exploitation market; MORE VULNERABILITIES, as AI-based vulnerability detection systems drive the number of identified flaws to unprecedented levels; and exploitation will be much FASTER, as future AI-driven attackers might exploit flaws within minutes of the release of a new software version.

This quantitative change, which is turning into a qualitative one, reshapes every stage of the attack lifecycle: vulnerability exploration becomes machine‑driven; network reconnaissance and vulnerability scanning become automated discovery; exploit code generation becomes LLM‑based; and LLMs also assist with privilege escalation, lateral movement, and sophisticated evasion.

Various research findings indicate that this significant shift toward automating vulnerability exploitation through generative AI technologies is about to happen.

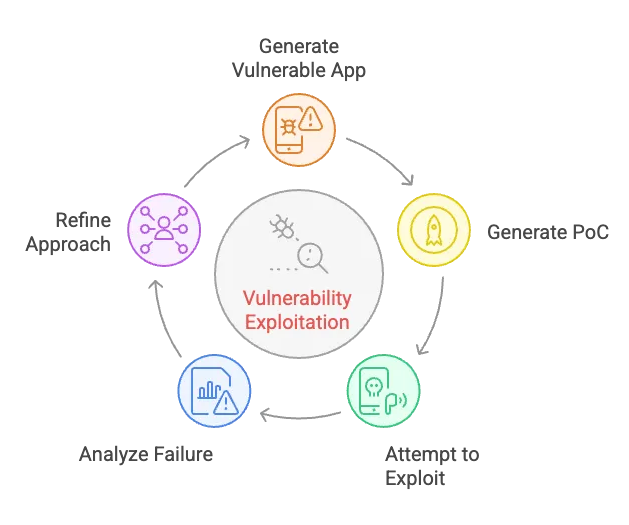

For example, an experiment showed that GPT‑4, when given only NIST CVE descriptions, successfully exploited 87% of a test set of 15 real-world one‑day vulnerabilities [1]. Similarly, two Israeli researchers demonstrated an AI system that ingests fresh CVE advisories and patches every day, generates exploit code, spins up a matching test environment, and validates a working PoC, typically in about 10–15 minutes and for roughly $1 per exploit [2].

In the zero‑day domain, besides Big Sleep’s successes, a security researcher showed how LLMs helped him analyze the patch diff for an Erlang/OTP SSH RCE vulnerability, localize the flaw, and produce a working exploit before any public PoC existed [3]. Additionally, the hierarchical multi‑agent system HPTSA coordinated multiple GPT‑4‑based agents to discover and exploit eight out of fifteen vulnerabilities [4].

Other experiments showed LLMs generating ten thousand variants of malicious JavaScript code, 88% of which evaded detection by EDR systems, revealing that AI can support detection bypass at scale. Another experimentally demonstrated, innovative use of AI against defense systems relies on adversarial techniques that inflate the public exposure of particular CVEs in order to manipulate EPSS scores, pushing organizations to de-prioritize significant vulnerabilities [5].

In real life too, generative AI has already become part of the exploitation game. GenAI-driven social engineering campaigns, such as those enabling advanced vishing attacks once considered impossible, have already been widely discussed. But now, AI is also becoming part of the offensive vulnerability exploitation toolset. For example:

So what can be done?

First, threat detection and telemetry must evolve to meet the new landscape. AI-powered attacks don’t always look like traditional intrusions. Behavioral analytics, anomaly detection, and attack path modeling need to be upgraded to reason across multiple layers and functionalities.

The second step is accepting that speed is now a permanent condition. Defenders must match automation with automation, speed with speed. Exposure management can no longer rely solely on periodic scans or static CVE feeds; it needs to become continuous, contextual, and AI-enhanced. That means investing in AI tools that do more than label vulnerabilities: they must triage, correlate, prioritize, evaluate true exploitability, and suggest mitigations based on real-world threat intelligence and asset criticality.
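To make the idea of contextual prioritization concrete, here is a minimal Python sketch that ranks findings by combining severity, an exploit-likelihood estimate, a threat-intelligence signal, and asset criticality. Everything in it is an assumption made for illustration: the `Finding` fields, the 1 to 5 criticality scale, the placeholder identifiers, and especially the weights, which are arbitrary and not taken from any product or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str                 # placeholder identifiers, not real CVEs
    cvss: float                 # base severity, 0.0-10.0
    exploit_probability: float  # an EPSS-style estimate, 0.0-1.0 (assumed input)
    actively_exploited: bool    # threat-intel signal (assumed input)
    asset_criticality: int      # 1 (low) to 5 (business critical), assumed scale


def priority_score(f: Finding) -> float:
    """Illustrative score in [0, 1]; the weights are arbitrary assumptions."""
    severity = f.cvss / 10.0
    criticality = f.asset_criticality / 5.0
    # Evidence of exploitation in the wild overrides the probability estimate.
    likelihood = 1.0 if f.actively_exploited else f.exploit_probability
    return round(0.3 * severity + 0.4 * likelihood + 0.3 * criticality, 3)


def triage(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority_score, reverse=True)


findings = [
    Finding("CVE-A", cvss=9.8, exploit_probability=0.02,
            actively_exploited=False, asset_criticality=1),
    Finding("CVE-B", cvss=7.5, exploit_probability=0.10,
            actively_exploited=True, asset_criticality=5),
    Finding("CVE-C", cvss=5.3, exploit_probability=0.60,
            actively_exploited=False, asset_criticality=3),
]
ranked = triage(findings)
print([f.cve_id for f in ranked])
```

In this toy data set the finding with the highest CVSS score ends up last, because it sits on a low-value asset and shows little sign of being exploited. That is the kind of reordering that labeling by severity alone would miss.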

Lastly, effective remediation at scale demands AI-native workflows. Automated patch planning, configuration hardening, and real-time mitigation recommendations can not only reduce mean time to response but also enable smarter and more consistent defensive actions against exploitation attempts. To truly deliver value, however, these capabilities must be embedded into existing operational ecosystems—integrating seamlessly with ticketing systems, orchestration tools, and other security products already in the response pipeline. It’s not enough to use AI for analytics and prioritization; organizations must also invest in AI systems that can generate actionable insights and translate them into concrete, system-ready measures—closing the loop between detection and action.
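As a rough illustration of closing the loop between detection and action, the sketch below turns a prioritized finding into a generic ticket payload with a remediation deadline. The field names, the urgency labels, and the `SLA_DAYS` deadlines are hypothetical; a real integration would follow the schema of whatever ticketing or orchestration system is already in place.

```python
import json
from datetime import date, timedelta

# Hypothetical mapping from urgency label to remediation deadline in days.
SLA_DAYS = {"critical": 2, "high": 7, "medium": 30}


def build_ticket(cve_id: str, asset: str, urgency: str,
                 mitigation: str, opened: date) -> dict:
    """Build a generic ticket payload; field names are illustrative only."""
    if urgency not in SLA_DAYS:
        raise ValueError(f"unknown urgency: {urgency!r}")
    due = opened + timedelta(days=SLA_DAYS[urgency])
    return {
        "title": f"[{urgency.upper()}] Remediate {cve_id} on {asset}",
        "asset": asset,
        "recommended_action": mitigation,
        "due_date": due.isoformat(),
    }


ticket = build_ticket(
    cve_id="CVE-EXAMPLE",  # placeholder, not a real identifier
    asset="payments-api",  # hypothetical asset name
    urgency="critical",
    mitigation="Apply vendor patch; restrict inbound access until patched",
    opened=date(2026, 2, 17),
)
# Serialized form, ready to hand to a ticketing system's API client.
payload = json.dumps(ticket, indent=2)
print(payload)
```

Keeping the output as a plain dictionary that serializes to JSON is a deliberate choice here: the same structure can be posted to a ticketing API, attached to an orchestration playbook, or logged for audit without change.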

Traditional vulnerability management must change. Many teams are drowning in detections yet still lack insight. The time-to-exploit window sits at 5 days. Implementing a Continuous Threat Exposure Management (CTEM) program is the path forward. Moving from vulnerability management to CTEM doesn't have to be complicated. This guide outlines steps you can take to begin, continue, or refine your CTEM journey.