ChainLeak: Critical AI framework vulnerabilities expose data, enable cloud takeover

As part of this research, Zafran launches Project DarkSide: an initiative that exposes the hidden weaknesses in AI application frameworks that can be weaponized into critical attacks.

Introduction

Zafran’s research team identified two critical vulnerabilities in Chainlit, a widely used open source AI framework. These vulnerabilities affect internet-facing AI systems that are actively deployed across multiple industries, including large enterprises. The flaws allow attackers to leak cloud environment API keys and steal sensitive files (CVE-2026-22218), as well as perform Server-Side Request Forgery (SSRF) against servers hosting AI applications (CVE-2026-22219). These vulnerabilities can be triggered with no user interaction. Zafran confirmed the vulnerabilities in real-world, internet-facing applications operated by major enterprises.

This discovery marks the launch of Project DarkSide, an ongoing research initiative focused on uncovering the dark side in the building blocks of AI applications.

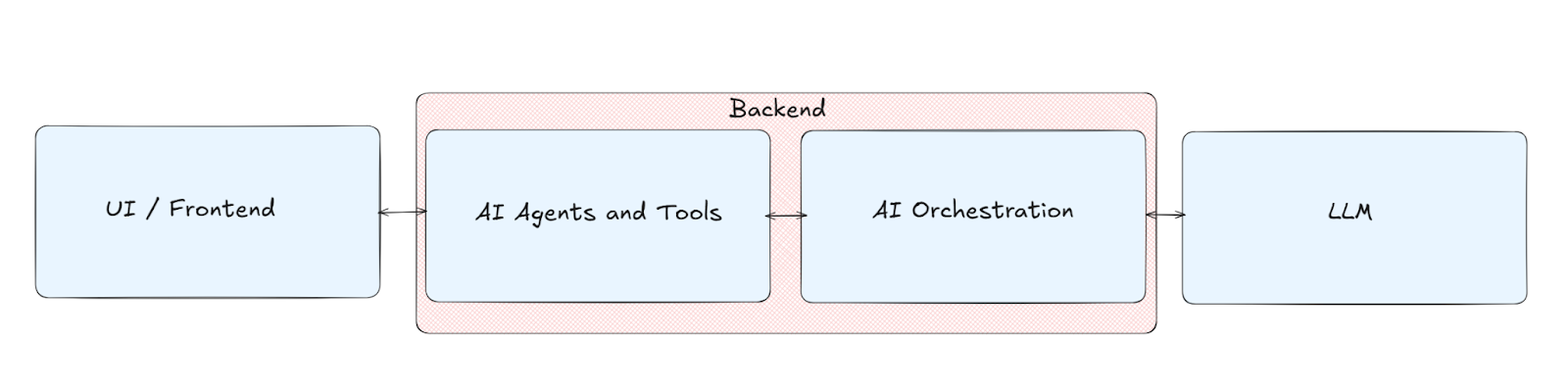

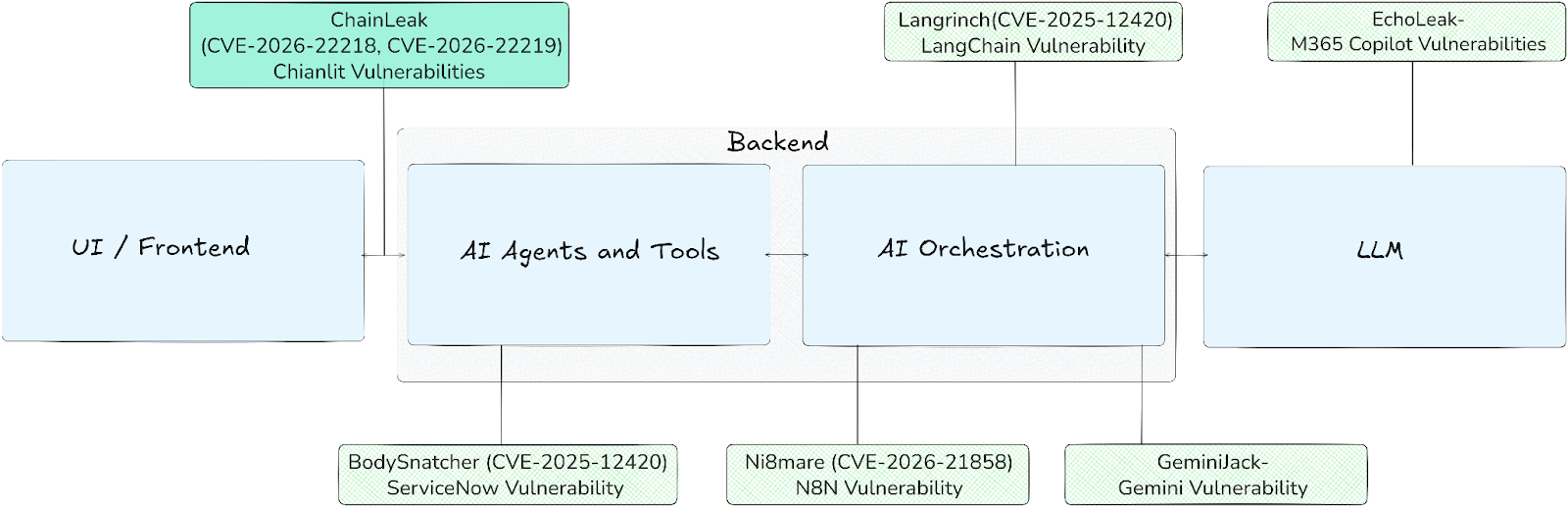

Zafran’s research highlights the real risks that enterprises building AI systems face. As organizations rapidly adopt AI frameworks and third-party components, long-standing classes of software vulnerabilities are being embedded directly into AI infrastructure. These frameworks introduce new and often poorly understood attack surfaces, where well-known vulnerability classes can directly compromise AI-powered systems. The most common AI applications include at least four core building blocks (UI / Frontend, AI Agents & Tools, AI Orchestration, and the LLM), and the way these layers are chained together introduces weaknesses arising from their interdependencies.

The Architecture Behind AI Applications

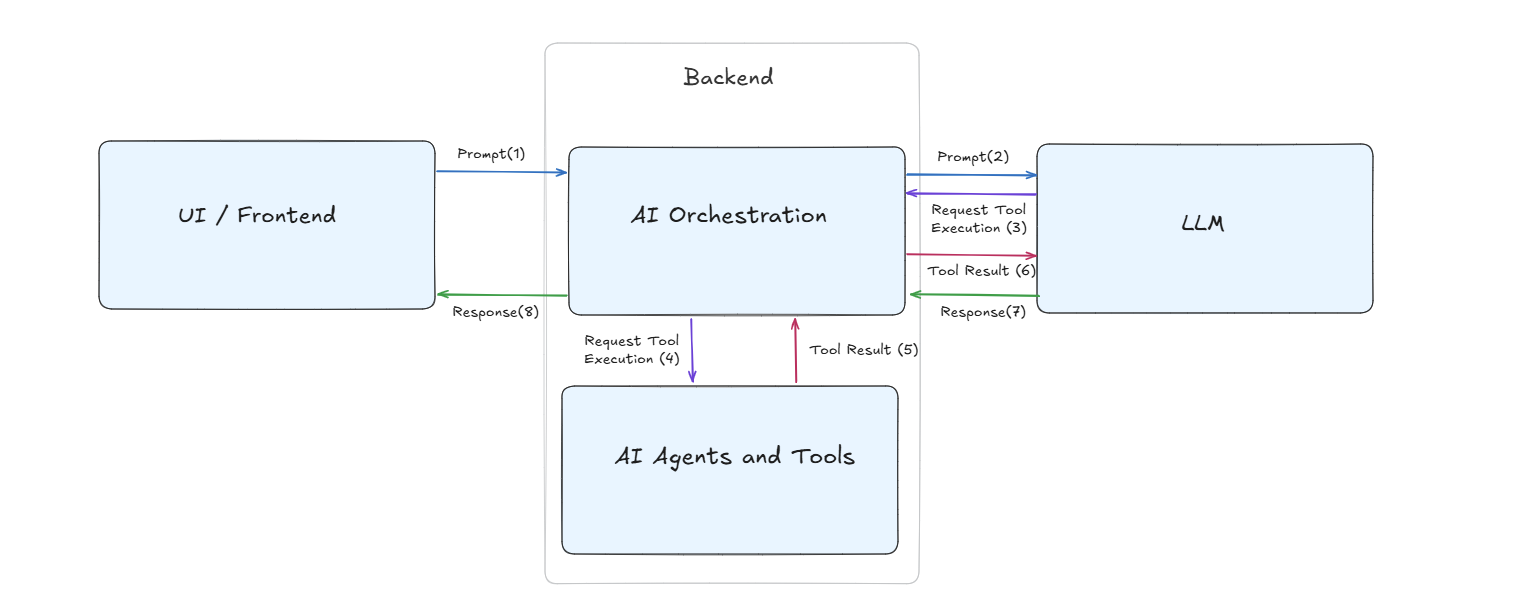

AI applications are composed of multiple architectural layers. Each layer is responsible for receiving user input, transforming it, and communicating the result to downstream components. Modern AI systems combine multiple frameworks, third-party services, and proprietary components that work together to deliver the AI experience seen by end users.

Based on our analysis, most AI systems contain the following four layers:

- UI / Frontend

- AI Agents & Tools

- AI Orchestration

- LLM (Large Language Model)

UI / Frontend

The client layer, which often uses frameworks such as Chainlit or custom dashboards, handles user interaction, authentication, and session management. This is where users submit prompts, upload data, and view model outputs. In multi-tenant deployments, the UI layer is responsible for isolating user contexts and enforcing authorization boundaries. Although it appears simple on the surface, the UI is a crucial part of the system since it serves as the entry point for all downstream model operations.

Backend - AI Agents and Tools / AI Orchestration

The backend of the AI application is the core of the system. It contains the most complex data handling and connects all the different parts of the system, including proprietary code and logic. This layer is also where enterprises integrate proprietary and sensitive data sources into the AI application.

This part includes both the communication and orchestration with the LLM and the custom tools and agents written by the enterprise. In practice, this layer is often implemented using frameworks and platforms such as LangChain, Strands Agents, Google Vertex AI SDK, and Microsoft Azure AI Foundry, alongside proprietary in-house orchestration stacks. This backend is typically connected to cloud services and LLM gateways such as AWS Bedrock, GCP Vertex AI, and other managed or self-hosted model access layers.

LLM

The LLM layer is the foundational model capability within the AI system. It is responsible for language understanding, reasoning, and generation, but it does not operate independently. In a production architecture, the LLM is accessed through backend services rather than directly from the frontend. Tool execution requests and prompts handled by the LLM are first passed through the orchestration layer. While tool execution requests may be initiated by the LLM, the actual execution occurs within the backend server’s security context.

Chainlit

Chainlit is an easy-to-use framework for building conversational AI applications and has seen strong adoption across the Python and AI developer ecosystem for LLM and agentic applications.

Chainlit provides both the user interface and parts of the backend for AI applications, and integrates with popular frameworks and services such as LangChain, OpenAI, AWS Bedrock, and Llama. It also includes built-in support for authentication, cloud deployment, and telemetry, making it suitable for everything from simple prototypes to production-grade AI applications.

Who uses Chainlit?

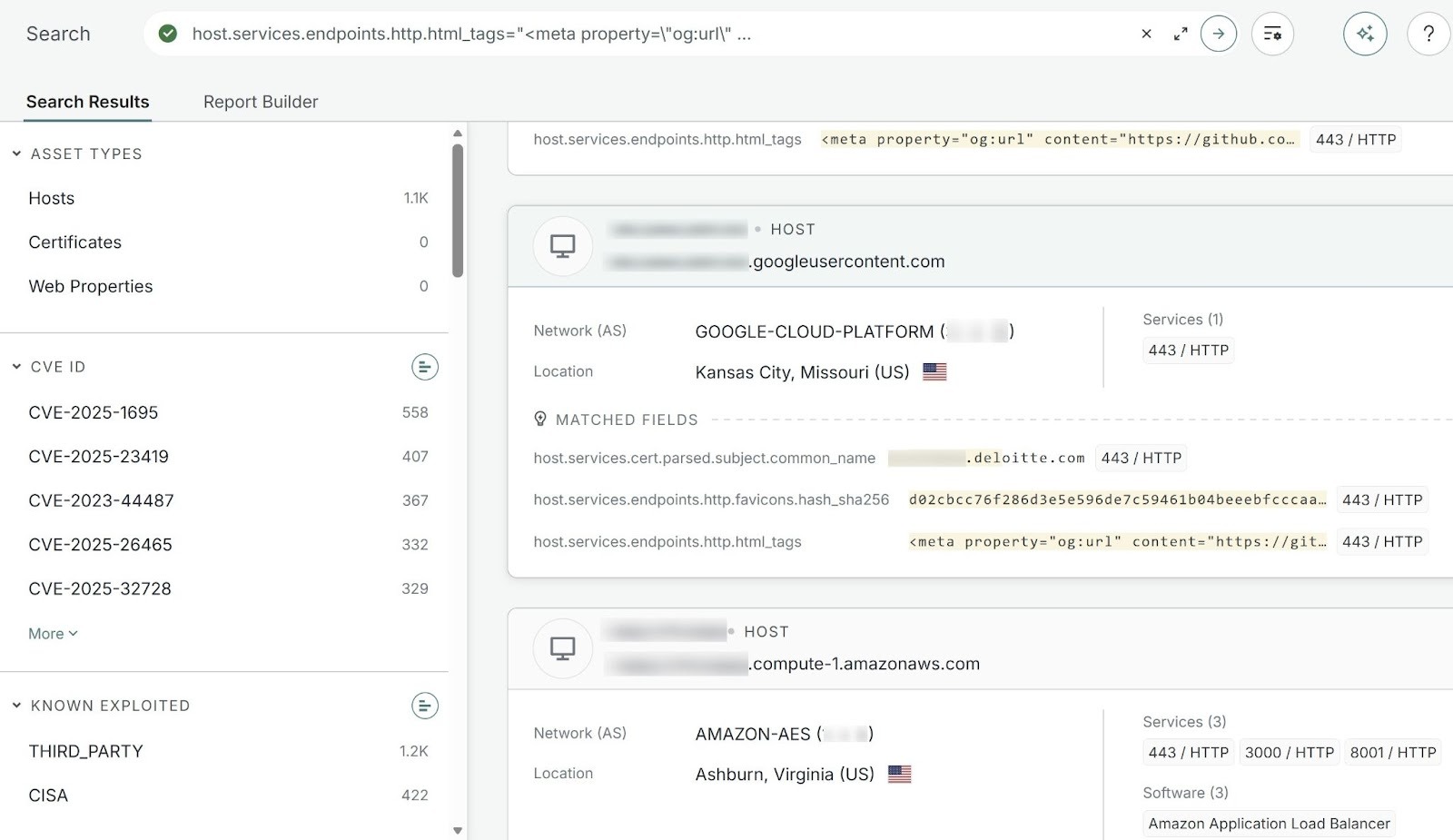

On PyPI, Chainlit averages approximately 700,000 downloads per month, totaling more than 5 million downloads over the past year. The framework is also referenced in technical blogs such as NVIDIA’s engineering blog and Microsoft Blog.

During our survey of publicly accessible systems, we identified multiple internet-facing Chainlit servers, including instances operated by large enterprises and deployments associated with academic institutions.

CVE-2026-22218: Arbitrary File Read

Chainlit Elements

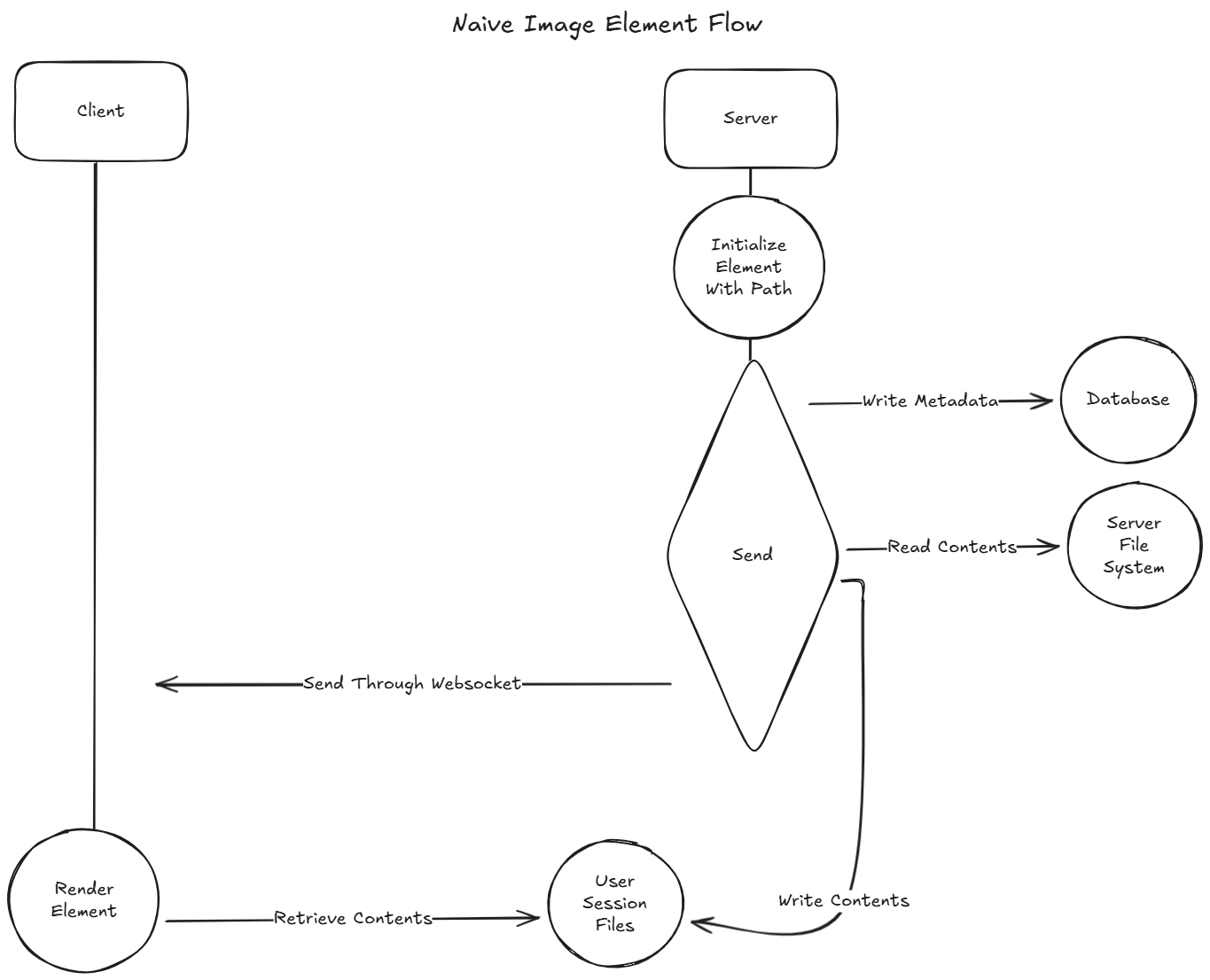

In Chainlit, elements are pieces of content that can be attached to a message. They may be a PDF, file, image, etc.

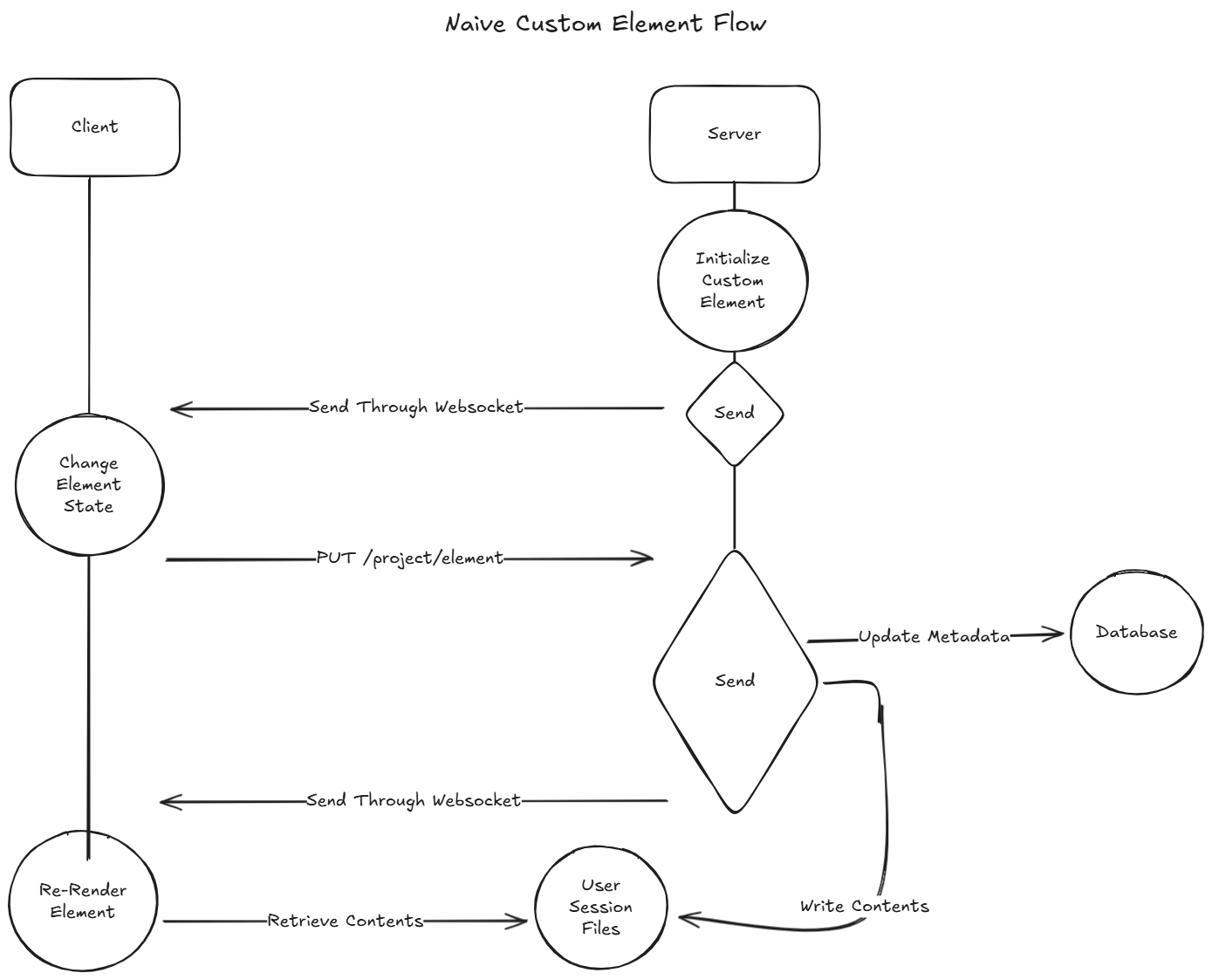

Whenever an element is sent as part of a message to the client, the Element class’s send function is called.

Its flow contains the following actions:

- Store the element metadata & state in the database.

- Write the element’s contents to a file accessible by the user through the API. This has two modes:

  - Elements can be initialized with a path on the server. If the path property is set, the element’s contents will be copied from this path. Image elements, for example, use this flow.

  - Otherwise, the element’s content property will be written.

  This can be seen in the following code snippet, which has been truncated for clarity. This function is called during send execution; path and content are the element’s properties.

    class BaseSession:
        async def persist_file(self, ...):  # parameters elided; they include path and content
            # ...
            if path:
                # Copy the file from the given path
                async with (
                    aiofiles.open(path, "rb") as src,
                    aiofiles.open(file_path, "wb") as dst,
                ):
                    await dst.write(await src.read())
            elif content:
                # Write the provided content to the file
                async with aiofiles.open(file_path, "wb") as buffer:
                    if isinstance(content, str):
                        content = content.encode("utf-8")
                    await buffer.write(content)
            # ...

- Send the element struct, without its content, through a websocket connection back to the client. This constructed element now contains an identifier of the relevant file.

Custom Elements

An element type of note is the custom type. It allows a developer to render a .jsx snippet on the client as part of a message. It can even be used to capture customizable input from the user. Chainlit exposes several APIs to this web element, which allow it to manage a persistent state in the system’s backend.

Persisting a custom element’s state is done with an HTTP request. After checking the element’s type is custom, the request payload is deserialized into the Element class. Then, the generic Element’s send function is called.

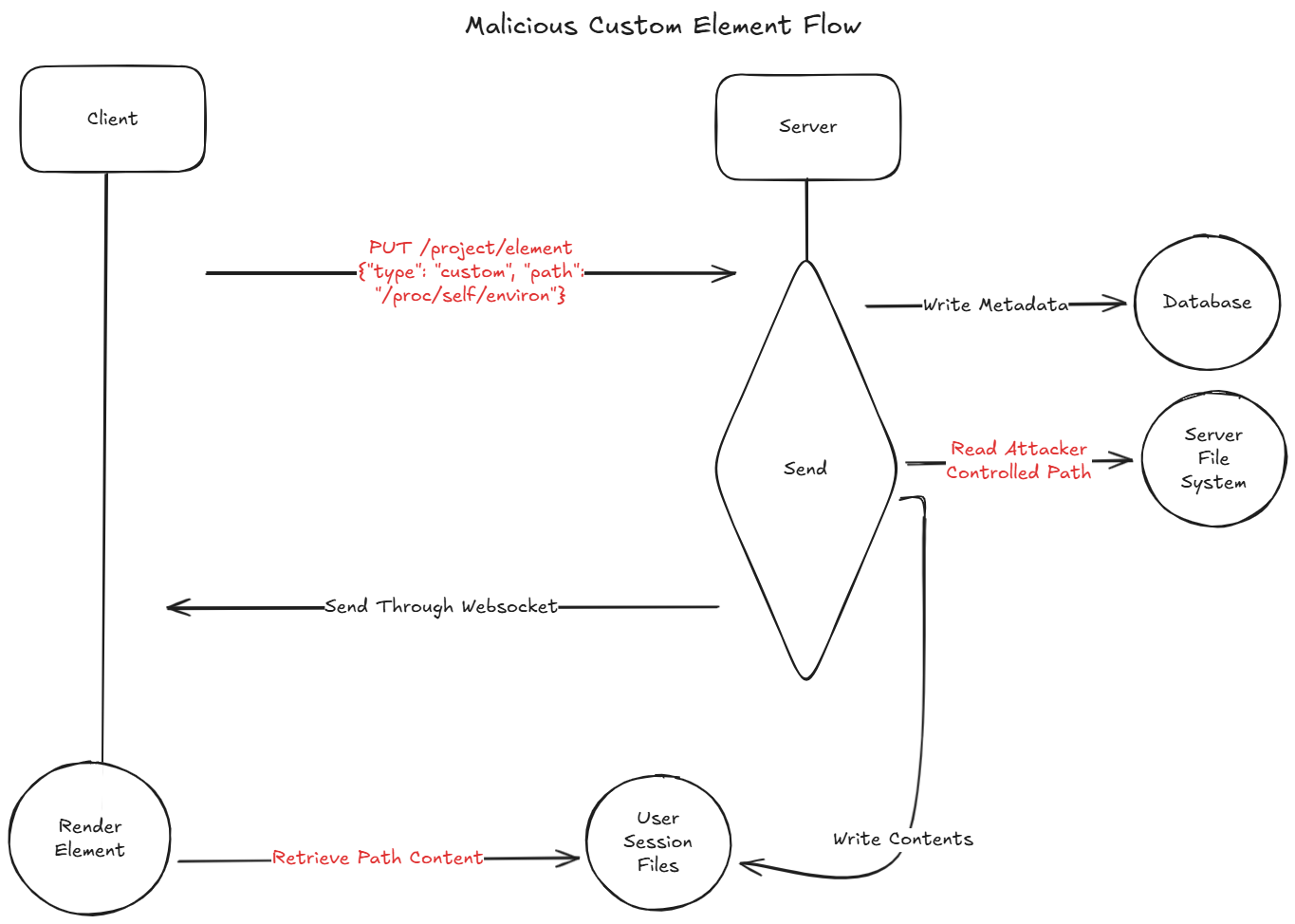

The Vulnerability

The /project/element endpoint allows an attacker to send an element with complete control over its fields. While the code does validate that the element type is custom, it does not validate any of the other properties.

    @router.put("/project/element")
    async def update_thread_element(...):  # parameters elided
        # ...
        if element_dict["type"] != "custom":
            return {"success": False}
        # ...
        element = Element.from_dict(element_dict)
        # ...
        await element.update()
        return {"success": True}

If the ‘path’ property of the element is set, the file at that path is always copied into the requesting user’s session files, regardless of the element type. This allows an authenticated attacker to copy the content of any file accessible to the Chainlit server into their own session.

The attacker can then take the element_id returned by the server and, with a simple HTTP request (for example, a curl command), read any file accessible to the Chainlit server process, resulting in arbitrary file read.
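One way to close this class of bug is to refuse server-side fields on elements that arrive from the client. The following is a minimal sketch of that idea, not Chainlit's actual patch: the function name, the exception, and both field sets are hypothetical and chosen only to mirror the properties discussed above.

```python
from typing import Any, Dict

# Hypothetical allow-list of fields a client may set on a custom element.
# The exact set is an assumption made for illustration.
CLIENT_SETTABLE_FIELDS = {"id", "threadId", "forId", "type", "name", "display", "props"}

# Fields that cause the server to read or fetch data on the client's behalf.
SERVER_ONLY_FIELDS = {"path", "url", "content"}


class UnsafeElementError(ValueError):
    """Raised when a client-supplied element must be rejected."""


def sanitize_client_element(element_dict: Dict[str, Any]) -> Dict[str, Any]:
    """Validate a client-supplied element and return only allow-listed fields."""
    if element_dict.get("type") != "custom":
        raise UnsafeElementError("only custom elements may be updated by clients")

    # Reject any server-only field that carries a non-empty value.
    forbidden = SERVER_ONLY_FIELDS & {k for k, v in element_dict.items() if v}
    if forbidden:
        raise UnsafeElementError(f"server-only fields set by client: {sorted(forbidden)}")

    # Drop everything that is not explicitly allowed.
    return {k: v for k, v in element_dict.items() if k in CLIENT_SETTABLE_FIELDS}
```

Rejecting the request outright, rather than silently dropping `path`, makes attempted abuse visible in logs; an allow-list also keeps newly added server-side fields from becoming client-controllable by default.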

CVE-2026-22219: SSRF

Data Layers & Storage Providers

Chainlit provides several options for its data layer, which is responsible for data persistence. The official implementation uses PostgreSQL, but alternative backends based on DynamoDB and SQLAlchemy are also available. Each option differs in its database schema and internal operational logic.

Data layers may also use a configured storage provider: S3, Azure Blobs, or any implementation of the required API. If one exists, element contents are saved there, and the server sends the client read URLs for them. In this case, the user session files mechanism acts as a fallback.

The Vulnerability

This vulnerability was found in the SQLAlchemy data layer.

It is triggered in the same way as the arbitrary file read: by sending a custom element with controlled properties. An element also has a url property, which allows creating elements on the server from remote resources. An attacker can set it to the SSRF target.

Upon element creation, the SQLAlchemy layer sends a GET request to the specified url in order to fetch its contents, and writes them to the storage provider.

    class SQLAlchemyDataLayer(BaseDataLayer):
        async def create_element(self, element):
            # ...
            content: Optional[Union[bytes, str]] = None
            if element.path:
                async with aiofiles.open(element.path, "rb") as f:
                    content = await f.read()
            elif element.url:
                async with aiohttp.ClientSession() as session:
                    async with session.get(element.url) as response:
                        if response.status == 200:
                            content = await response.read()
                        else:
                            content = None
            elif element.content:
                content = element.content
            # ...

Note that in this case, the data won’t be accessible through user session files. In order to exfiltrate the response, we must retrieve the element from the /project/thread/{thread_id}/element/{element_id} endpoint - this will make the server generate a read URL to the contents.
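A common defense against this kind of SSRF is to validate a URL before the server fetches it. The sketch below is an illustrative check, not Chainlit's fix: `is_public_http_url` and the injectable `resolve` parameter are hypothetical names, and the policy (only http/https, only globally routable addresses) is an assumption.

```python
import ipaddress
import socket
from typing import Callable, Iterable
from urllib.parse import urlsplit


def _system_resolver(host: str) -> Iterable[str]:
    """Resolve a hostname to its IP addresses using the system resolver."""
    return {info[4][0] for info in socket.getaddrinfo(host, None)}


def is_public_http_url(
    url: str,
    resolve: Callable[[str], Iterable[str]] = _system_resolver,
) -> bool:
    """Return True only if the URL is http(s) and every resolved address is global."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return False
    try:
        addresses = list(resolve(parts.hostname))
    except OSError:
        return False  # resolution failed: treat as unsafe
    if not addresses:
        return False
    for addr in addresses:
        try:
            ip = ipaddress.ip_address(addr)
        except ValueError:
            return False  # unparsable address: treat as unsafe
        # Rejects loopback, private, link-local (e.g. 169.254.0.0/16), and reserved ranges.
        if not ip.is_global:
            return False
    return True
```

A check like this is only part of the picture: the fetch should connect to the same address that was validated (to avoid DNS rebinding between check and use), and HTTP redirects need the same validation or should be disabled.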

Exploitation

The two Chainlit vulnerabilities can be combined in multiple ways to leak sensitive data, escalate privileges, and move laterally within the system. Once an attacker gains arbitrary file read access on the server, the AI application’s security quickly begins to collapse. What initially appears to be a contained flaw becomes direct access to the system’s most sensitive secrets and internal state.

Environment Variables

An attacker can use the arbitrary file read to exfiltrate environment variables by reading /proc/self/environ. These variables often contain highly sensitive values that the system and enterprise depend on, including API keys, credentials, internal file paths, internal IPs, and ports. This is especially dangerous in AI systems, where the servers have access to internal company data in order to provide a tailored chatbot experience to their users.

In environments where authentication is enabled, variables such as CHAINLIT_AUTH_SECRET may be present. This secret is used to sign the authentication tokens. Given user identifiers, which can be obtained by leaking the database or inferred from organization emails, an attacker can forge authentication tokens and take over those users’ accounts.
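This is why a leaked signing secret must be rotated, not just protected going forward. The sketch below illustrates the principle with a generic HMAC-signed token; the token layout and the `sign_token` / `verify_token` helpers are assumptions for illustration and do not reproduce Chainlit's actual token format.

```python
import base64
import hashlib
import hmac
import json


def _b64url(data: bytes) -> str:
    """URL-safe base64 without padding."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _signature(payload_b64: str, secret: str) -> str:
    digest = hmac.new(secret.encode(), payload_b64.encode(), hashlib.sha256).digest()
    return _b64url(digest)


def sign_token(claims: dict, secret: str) -> str:
    """Serialize the claims and append an HMAC-SHA256 signature."""
    payload_b64 = _b64url(json.dumps(claims, sort_keys=True).encode())
    return f"{payload_b64}.{_signature(payload_b64, secret)}"


def verify_token(token: str, secret: str) -> bool:
    """Check the signature only; anyone holding the secret can pass this check."""
    payload_b64, sep, sig = token.rpartition(".")
    if not sep:
        return False
    expected = _signature(payload_b64, secret)
    return hmac.compare_digest(expected.encode(), sig.encode())
```

Verification proves only that the signer knew the secret, so a token minted with a leaked secret is indistinguishable from a legitimate one. After rotation, every token signed with the old value fails verification, which is the intended effect even though it also logs out legitimate users.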

Environment variables may also include cloud credentials (for example, AWS_SECRET_KEY) required by Chainlit for cloud storage. These credentials may allow lateral movement within the surrounding cloud environment. Additionally, environment variables may contain sensitive API keys or the addresses and names of internal services (for example, the Postgres data layer expects a DATABASE_URL environment variable). These addresses can then be probed using the SSRF vulnerability to access sensitive data from internal REST APIs. Such identifiers may also be discovered by leaking /etc/hosts.
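To gauge how much a read of /proc/self/environ would expose, an operator can audit which secret-like variables the server process actually carries. The following is a rough sketch under stated assumptions: the name pattern and the `sensitive_env_names` helper are hypothetical, and a name-based heuristic will miss secrets stored under innocuous names.

```python
import re
from typing import List, Mapping

# Heuristic pattern for variable names that usually hold secrets or
# connection strings. The list of keywords is an assumption.
SENSITIVE_NAME = re.compile(
    r"(SECRET|TOKEN|PASSWORD|PASSWD|API_?KEY|CREDENTIAL|DATABASE_URL)",
    re.IGNORECASE,
)


def sensitive_env_names(env: Mapping[str, str]) -> List[str]:
    """Return the sorted names of non-empty variables that look sensitive."""
    return sorted(
        name for name, value in env.items()
        if value and SENSITIVE_NAME.search(name)
    )
```

Calling `sensitive_env_names(os.environ)` (after `import os`) inside the deployed container lists the names only, never the values. Anything it reports is a candidate for moving into a secrets manager or for scoping down.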

Chainlit Database

If the Chainlit setup uses SQLAlchemy with an SQLite backend as its data layer, the database file itself can be leaked. This database may contain all users, conversations, messages, and metadata sent to the application, exposing sensitive user-generated content and internal application state.

LangChain Cache

When Chainlit is used in conjunction with LangChain as the orchestration layer, it may be configured to use a cache file on disk. In this configuration, LangChain writes all prompts sent to the LLM and the corresponding responses to .chainlit/.langchain.db in the application directory.

This cache stores the prompts and responses of all users. Combined with the arbitrary file read vulnerability, it allows an attacker to leak prompts across tenants. In multi-tenant environments, this is particularly impactful: periodically reading this file can reveal all users’ prompts and responses over time. We validated this behavior and produced a PoC demonstrating cross-user data leakage using this primitive.

Source Code Leak

An attacker may also leak the application source code, specifically the app.py file, from the Chainlit directory. Chainlit provides developers with extensive customization through callbacks and hooks. Access to the source code enables further vulnerability research into the proprietary callbacks, and helps identify additional attack paths or sensitive targets within the system.

Cloud Access and Lateral Movement

Once cloud credentials or IAM tokens are obtained from the server, the attacker is no longer limited to the application: they gain access to the cloud environment behind it. Storage buckets, secrets managers, LLMs, internal data, and other cloud resources may become accessible.

If Chainlit is deployed on an AWS EC2 instance with IMDSv1 enabled, the SSRF vulnerability can be used to access http://169.254.169.254/latest/meta-data/iam/security-credentials/ and retrieve the credentials of the IAM role attached to the instance, allowing lateral movement within the cloud account. (IMDSv2 is not accessible in this case, as the SSRF only supports GET requests with no ability to control headers.)
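The reason IMDSv2 resists a GET-only SSRF is visible in the shape of its requests. The sketch below only constructs the two requests a legitimate IMDSv2 client makes from the instance, without sending them; the helper names are hypothetical, while the endpoint paths and header names follow the documented IMDSv2 session flow.

```python
from urllib.request import Request

IMDS_BASE = "http://169.254.169.254"


def build_imdsv2_token_request(ttl_seconds: int = 21600) -> Request:
    """Step 1: a PUT with a TTL header is required to obtain a session token."""
    return Request(
        f"{IMDS_BASE}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_imdsv2_metadata_request(path: str, token: str) -> Request:
    """Step 2: every metadata GET must carry the session token in a header."""
    return Request(
        f"{IMDS_BASE}/latest/meta-data/{path.lstrip('/')}",
        method="GET",
        headers={"X-aws-ec2-metadata-token": token},
    )
```

Both steps need something the SSRF primitive described here cannot supply: a PUT method for the token and a custom header on the follow-up GET. Enforcing IMDSv2 on instances is therefore a useful defense-in-depth measure in addition to patching.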

How do I Protect My System?

Chainlit has released a patched version of their framework addressing the discovered vulnerabilities (version 2.9.4). Impacted customers are advised to patch affected systems as soon as possible and to implement mitigations in the meantime. Zafran has also developed a Snort signature that may be applied until systems are patched.

    alert tcp $EXTERNAL_NET any -> $HTTP_SERVERS $HTTP_PORTS (
        msg:"Chainleak Vulnerabilities Detection - PUT to /project/element";
        flow:established,to_server;
        content:"PUT"; http_method;
        content:"/project/element"; http_uri; depth:16;
        classtype:web-application-activity;
        sid:100001; rev:1;
    )
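Where no IDS is in place, the same condition can be checked retroactively against web server access logs. The following is a minimal sketch, assuming logs in Common Log Format; the regular expression and the `flag_element_updates` helper are illustrative and not part of Zafran's published signature.

```python
import re
from typing import Iterable, Iterator

# Matches the quoted request part of a Common Log Format line,
# e.g. "PUT /project/element HTTP/1.1".
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<uri>\S+) HTTP/[\d.]+"')


def flag_element_updates(log_lines: Iterable[str]) -> Iterator[str]:
    """Yield log lines that record a PUT request to /project/element."""
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        if match.group("method") == "PUT" and match.group("uri").startswith("/project/element"):
            yield line
```

Like the signature above, this flags activity rather than confirmed exploitation: legitimate custom elements use the same endpoint, so hits are leads for review. Access logs typically omit request bodies, so confirming whether a `path` or `url` field was supplied needs body-level logging or packet capture.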

Project DarkSide: The Attack Surface Behind AI Systems

Before the widespread adoption of AI systems, application attack surfaces and entry points were relatively well defined. The building blocks of AI systems are more complex, and understanding their architecture is critical to identifying where untrusted data enters the system and where validation and security controls must be enforced. Traditionally, the primary threat originated from malicious users. In AI applications, however, the LLM itself can also act as an attack vector. In that context, there are two common scenarios that can lead to system compromise.

In the first scenario, the LLM itself is tampered with. As described in an Anthropic blog post, even a small number of poisoned samples can influence an LLM and create a new entry point for an attacker.

In the second scenario, the untampered LLM generates malicious or unexpected outputs that are passed directly to backend services, either through injection-style attacks or by triggering privileged tool execution. As a result, the threat model for AI applications is broader than that of a traditional web application, where risk historically came primarily from users interacting with the system.

Recent research has increasingly focused on vulnerabilities in AI frameworks and infrastructure. As part of this broader effort, Zafran launched Project DarkSide to systematically identify and expose security risks across the different layers of AI application architectures.

In the following image, we map several recently disclosed vulnerabilities from multiple research teams to the specific layers of the AI application stack where they reside.

Project DarkSide: The Next Steps

While AI security discussions often focus on model-level issues such as prompt injection, the rapid adoption of third-party frameworks significantly expands the attack surface, allowing well-known vulnerability classes in access control, file handling, and network interactions to directly compromise AI infrastructure. Last year, Salesforce customers experienced a damaging incident when OAuth tokens stolen from Drift, a conversational AI marketing tool owned by Salesloft, were used to access their Salesforce data.

Zafran research is actively identifying additional vulnerabilities in widely used AI frameworks and working to secure the rapidly growing ecosystem of AI applications. Project DarkSide will continue to publish new findings as they emerge, and Zafran welcomes collaboration with additional partners in this area.

Disclosure Timeline

Report submitted to Chainlit - November 23, 2025

Acknowledged by Chainlit maintainers - December 9, 2025

Chainlit published patched version - December 24, 2025

CVEs assigned by VulnCheck - January 6, 2026

CVEs published - January 19, 2026
